Bounded computation and the nature of intelligence

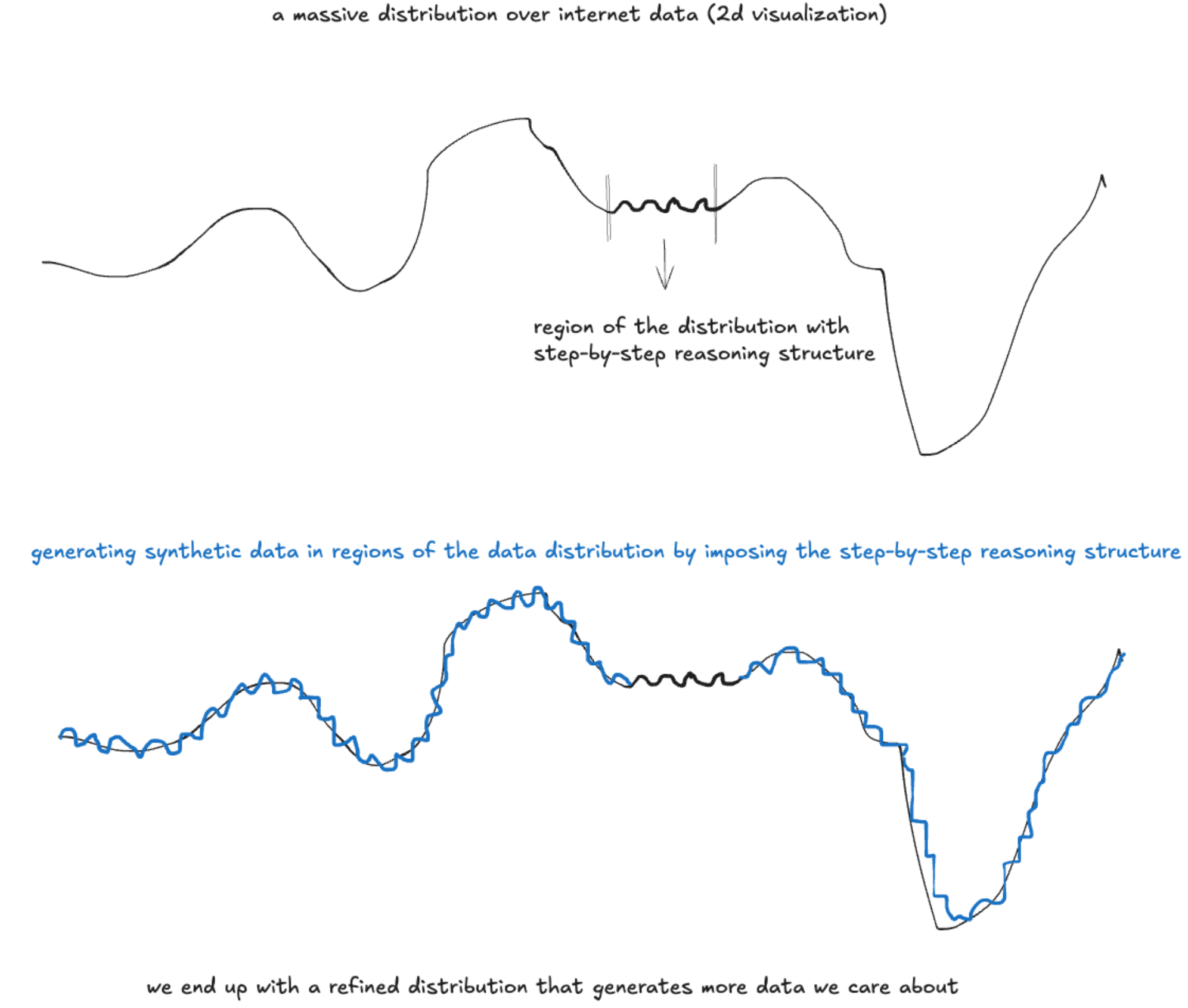

I recently had a conversation that seemed to clear up a lot of things for me. I will try to retrace it here to see whether I can recover the insights we seemed to land on.

The conversation started with the question of how useful it is to generate synthetic data with large language models in order to improve their performance and train a new generation of more capable models. This clearly seems to work to a certain extent [1, 2, 3, 4]. Chain-of-thought reasoning, step-by-step reasoning, STaR, and related methods improve LLM performance on tasks that we (humans) care about. I don’t really understand why this works, though, and I have not yet seen a compelling explanation grounded in first principles. Right now, it seems like we are taking concepts and intuitions from psychology, cognitive science, or common sense and applying them to LLMs to see whether they lead to better performance, and in some cases they do.

Maybe it’s the nature of the data we’re dealing with. Human-generated language data has structure, and the things we care about LLMs being able to do, like reasoning tasks, have even more structure. Enforcing that structure on regions of the LLM’s vast representation space where it is not inherently present might make those regions more salient from the human point of view. To put it another way, the LLM has learned a giant probability distribution over language from the internet. Most of this internet data does not have reasoning-style structure (like chain-of-thought), but the subsets that do are also important for humans (whether we’re talking about step-by-step solutions to coding problems or an explanation of a detective’s investigation). Generating more data with the LLM while enforcing chain-of-thought in domains that were not previously populated with this structure therefore improves the LLM in ways that humans care about.

I think there’s probably more to it than that, maybe something to do with the complexity of the computation (chain-of-thought takes longer than zero-shot) or the information content of the states traversed during different reasoning regimes (though here information content again needs to be defined with respect to what humans care about). Here’s a simplified illustration of what I mean by this:
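
To make the picture a bit more concrete, here is a toy sketch in Python. It is a heavy simplification and rests on assumptions that are not part of the conversation above: the “model” is just a categorical distribution over four made-up kinds of output, the filter `is_structured` stands in for “what humans care about”, and `self_train_round` and `synthetic_weight` are hypothetical names. Each round mixes the model’s current distribution with the expected share of its own samples that pass the filter, then renormalizes, which is roughly what keeping only the step-by-step generations and retraining on them would do in expectation.

```python
def is_structured(output):
    """Assumed stand-in for 'has the reasoning-style structure we care about'."""
    return output.endswith("step-by-step")


def self_train_round(probs, synthetic_weight=1.0):
    """One round of filtered self-training on a categorical toy 'model'.

    Uses expected counts instead of random sampling so the result is
    deterministic: output x is generated with probability probs[x] and
    kept only if it passes the filter.
    """
    mixed = {
        x: p + synthetic_weight * p * (1.0 if is_structured(x) else 0.0)
        for x, p in probs.items()
    }
    total = sum(mixed.values())
    return {x: m / total for x, m in mixed.items()}


def structured_mass(probs):
    """Total probability assigned to structured outputs."""
    return sum(p for x, p in probs.items() if is_structured(x))


# Hypothetical "pretrained" distribution over four kinds of output.
model = {
    "code, step-by-step": 0.10,
    "code, answer only": 0.40,
    "story, answer only": 0.50,
    "story, step-by-step": 0.00,  # never produced by the initial model
}

history = [structured_mass(model)]
for _ in range(5):
    model = self_train_round(model)
    history.append(structured_mass(model))

print([round(h, 3) for h in history])
print(model["story, step-by-step"])
```

In this toy, the step-by-step share climbs from 0.10 to roughly 0.78 over five rounds, but the kind of output that had zero probability to begin with stays at zero. A real LLM generalizes across domains in a way a lookup table cannot, so this only shows the crudest version of the point: filtering your own samples reshapes the distribution you already have.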

This starts to paint a picture in which synthetic data won’t actually lead to AGI, though that’s not to say it won’t lead to significant advances over current models. To reiterate, I don’t think we yet understand why these methods work so well. We also don’t know how to go about discovering other ways of adding structure to the base dataset that improve the models in ways we care about. I do think it’s pretty safe to say, though, that given a finite-size neural network and a fixed original dataset, the capabilities that can be reached are fundamentally bounded, just by considering the capacity of the model and the degrees of freedom afforded by the dataset. (Aside: going back to the image, you could generate synthetic data to push the distribution into whatever shape you want, but that is probably going to be difficult once we have an initial learned distribution from pretraining, and it’s unclear what “shape” is actually useful.)

A natural thing to do when pondering the scaling laws of artificial systems is to consider how humans learn and develop intelligence over time. Humans are very different from AIs, but I think the story I’m about to lay out is very relevant to thinking about this problem from first principles. Humans have bounded computational capacity. You can only focus on a few things at a time and get so much done in a day, and you learn more quickly when you’re young than when you’re old. Yet humans do a remarkable number of things with very few computational resources. How do we do it? For starters, we live in an environment that we can change over time. I think thoughts and I reason, but I don’t have to keep all of that in my head all the time. I can write my thoughts down on a computer and then forget about them to focus on other things, or return to them later (this is essentially what I’m doing now).

Writing is one example, but in fact most of what humans do is restructure our environment so as to offload computational costs onto it. These costs can be physical, like hunting for food, or mental, like figuring out a math problem; both need to be orchestrated by the brain. Instead of spending a significant amount of energy on hunting, we developed farming. Instead of performing complicated calculations in our heads, we have many tools to offload those costs, from writing out equation manipulations on a piece of paper, to doing arithmetic on calculators, to having computers do these things for us. All of these free our biological computational apparatus to focus on other problems.

Similarly, each new generation starts with a clean slate and learns things in a different context from the previous one. I never had to learn to use computers the way my parents did. For me, computers were something that had always existed and I didn’t know any other life, so I learned to perform tasks on computers without spending as much time learning the tasks that computers made much easier. Likewise, the next generation is growing up with LLMs and will not see this technology the way we do. Their mental development will be fundamentally different in that respect: they will learn to use these tools in different ways, focus on different problems, and figure out new ways to offload tasks to their environment, until the next generation comes along, and on and on it goes.

I think this process is fundamental to the continuous development of global collective intelligence, and it’s currently unclear how we will replicate it with AI other than by retraining and finetuning these models as we figure out new problems we want to apply them to. LLMs seem to forget at some point if you overtrain them, which might be relevant to this general topic.

- I also wanted to mention that our collective computation is distributed: multiple humans can interact to solve a problem collectively, because each of them brings different information and computational ability to the table.

Citation

@misc{smékal2024,
  author = {{Jakub Smékal}},
  title = {Bounded Computation and the Nature of Intelligence},
  date = {2024-11-26},
  url = {https://jakubsmekal.com/writings/261124_in_context/},
  langid = {en}
}